The rise of AI chatbots in mental health care has UK experts both intrigued and concerned. While these digital companions promise 24/7 support and shorter therapy wait times, mental health professionals are raising red flags about their limitations.

Let’s face it – robots aren’t exactly known for their emotional intelligence.

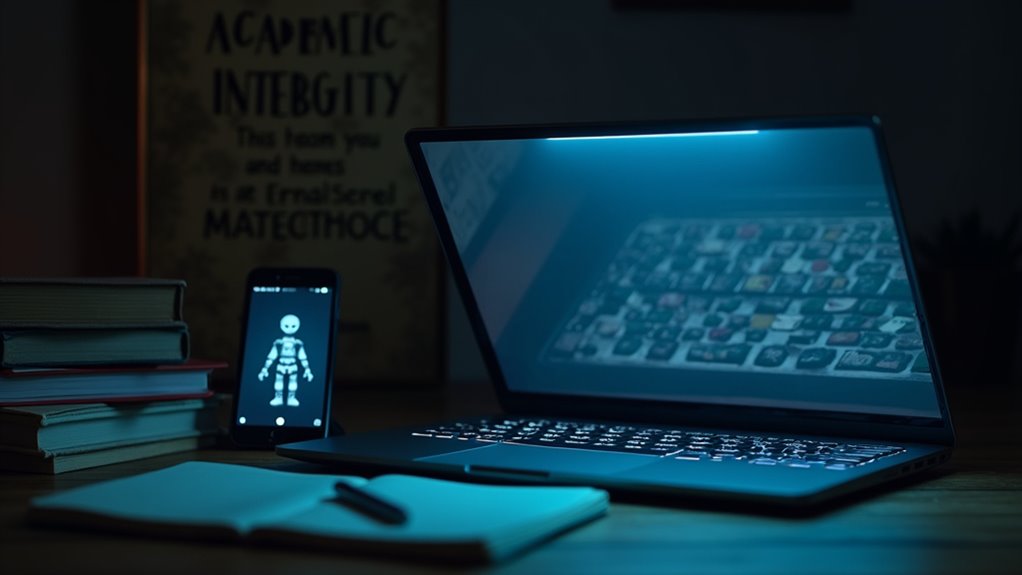

While AI can crunch numbers and analyze data, it’s still learning the basics of human feelings and connections.

The numbers look promising on paper. AI chatbots are projected to cut therapy wait times in half by 2025, and they’re making mental health support accessible to people in areas where human therapists are scarce. They never sleep, never take vacations, and never get tired of listening to the same story twice. Sounds perfect, right?

Not so fast. UK experts are particularly worried about these chatbots’ inability to pick up on subtle emotional cues. Sure, they can process data and spit out personalized responses, but they can’t replicate the nuanced empathy of a human therapist. The experts also warn that users could become dependent on AI systems, and that the “black box” algorithms powering these chatbots make it difficult to understand how they arrive at their responses.

And here’s the kicker – studies suggest these AI companions might actually increase feelings of loneliness. Talk about irony.

Privacy concerns are another major headache. These chatbots collect massive amounts of sensitive personal data, and the regulatory framework hasn’t quite caught up with the technology. With over 20,000 wellbeing apps currently available on app stores, the potential for data breaches is staggering. It’s like giving your diary to a stranger who promises they’ll keep it safe, but their security system is still under construction.

The integration of AI companions into healthcare systems continues despite these concerns. They’re being used as clinical support tools, helping human therapists gather insights and track patient progress.

But experts insist they should complement, not replace, human care providers. Because let’s be honest – would you rather pour your heart out to a sophisticated algorithm or a human who can actually understand what it feels like to have a bad day?

As international collaboration grows to establish standards for AI in mental health care, UK experts emphasize the need for better public education about these tools’ capabilities and limitations.

After all, an AI chatbot might be great for practicing difficult conversations, but it won’t be sending you a sympathy card anytime soon.